10 BEST AI 3D MODEL GENERATORS IN 2026.

19.05.2025

Updated on 01/02/2026

3D production is very diverse. A typical pipeline can be roughly summarized as follows:

1. Start with a concept: sketch out the idea, then produce drawings and details.

2. Create basic block-outs or scale mockups (used in game-dev prototyping).

3. Create background assets (matte paintings) and 3D meshes for distant and midground layers, plus texture painting.

4. Create detailed foreground 3D models (modeling, sculpting).

5. Retopology (rebuilding the geometry into a clean, lighter mesh) for game-dev optimization.

6. Rigging (bone creation) and skinning (binding bones to mesh) for animated 3D models.

7. Add effects (particles, physics effectors, force fields, etc.) and assemble the scene.

8. Final texturing.

9. Rendering and post-processing.

10. A separate stage is production for the Metaverse (virtual avatar worlds), AR (augmented reality), VR (virtual reality), and 3D printing.

The pipeline may vary greatly. What's important is that each stage is usually handled by different professionals. Unless you’re a multitasking freelancer, you don’t need to master everything. It’s enough to excel at just one or a few areas. Many top-tier 3D professionals work in a narrow field for years and do just fine.

But now the 3D world is beginning to change. Neural technologies have reached a level where they can replace the early stages of 3D production.

The most mature AI capability so far is generating 3D concept images of an object from different angles. Image generators like FLORA handle this very well.

10. KREA

A multifunctional tool that combines various generators (in this case, an older Hunyuan model) with its own tools. It can generate 3D models, place them into any background in real time to create new images, and even generate complete 3D scenes. The service gives you 100 credits, enough to generate approximately 4 to 7 models. Avoid generating complex cars or characters, as it struggles with those.

9. MESHY AI

A decent generator with auto-rigging and a Blender API. You get 100 credits and 10 uploads per month when you sign up. It is best used for base mockups, as the output tends to be simple and the topology is broken. It includes a remeshing tool. Complex models are better generated in parts.

8. CSM AI

An open-source 3D model generator with paid features for retexturing, retopology, and object segmentation using ChatGPT. On login, you receive 10 one-time credits. That is enough for 10 basic 3D models or 3–5 more complex ones. The generation quality is weak: the back side of models may appear as black planes. Still, this open-source generator is promising and keeps improving.

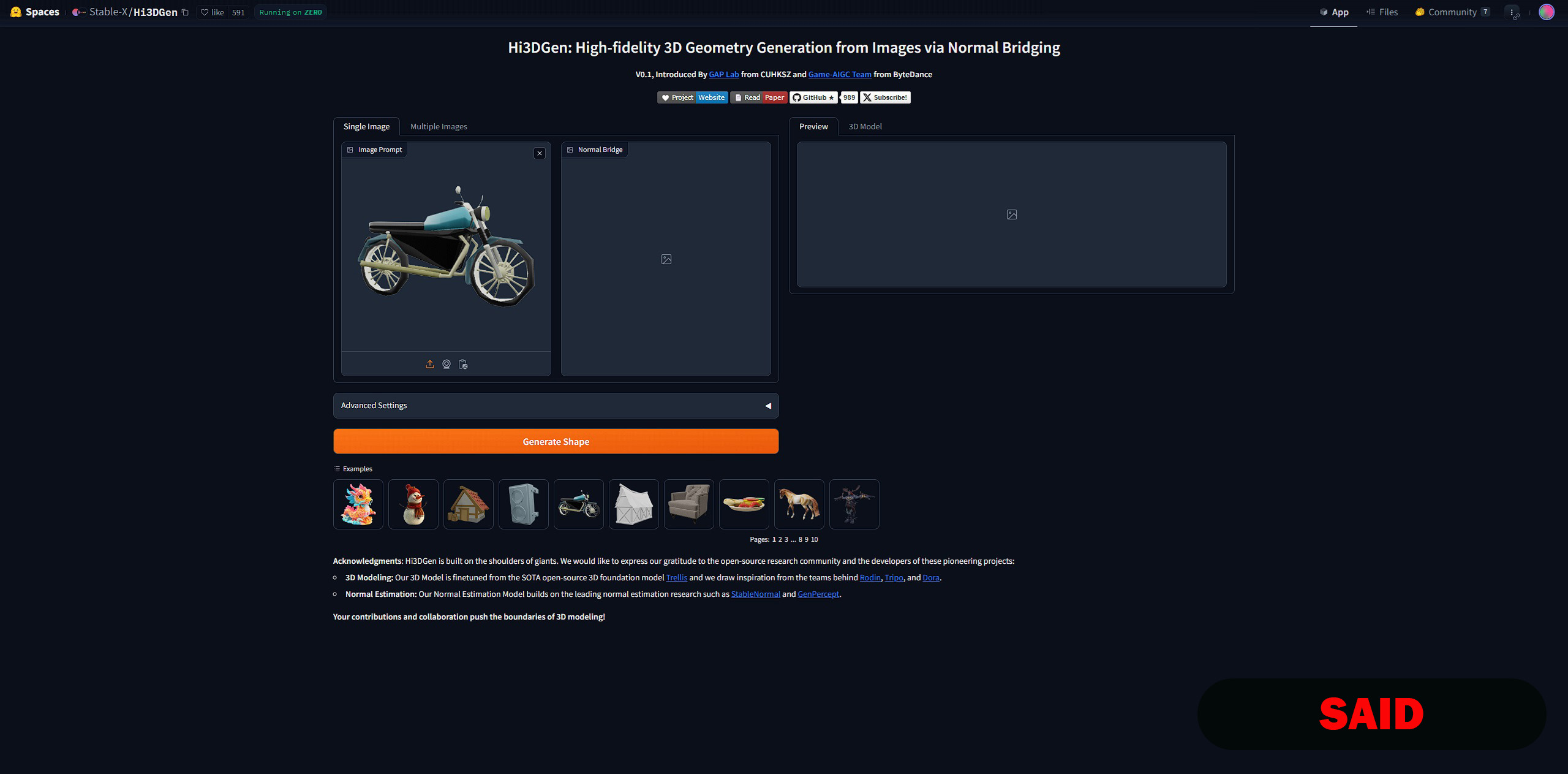

7. Hi3DGen

A 3D generator that uses normal maps for enhanced detailing. It is built on StableNormal and GenPercept and has a simple interface on Hugging Face. It also supports ComfyUI in a local version based on the state-of-the-art TRELLIS model. It estimates normals from the input images and creates mid-quality base meshes with general shapes and no textures. One-time credits are provided on login, enough for 5–10 models.

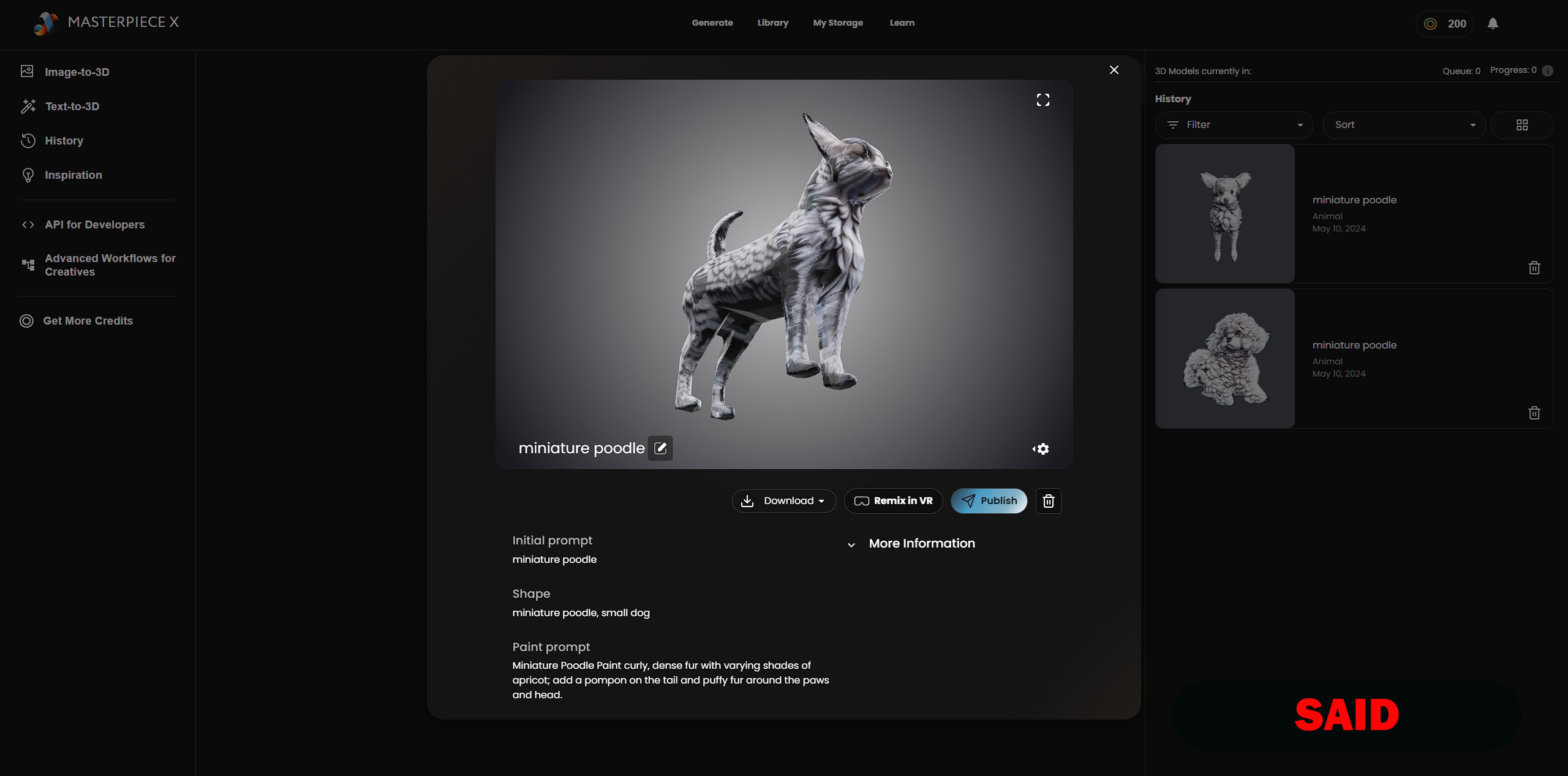

6. MASTERPIECE X

A decent generator of 3D models from text or from images with a transparent background. The free plan offers 200 credits per month, enough for 4 models. The results are suitable for remeshing. It works with ComfyUI, making it ideal for those who enjoy flexible, node-based workflows. It has a beautiful interface and simple tools.

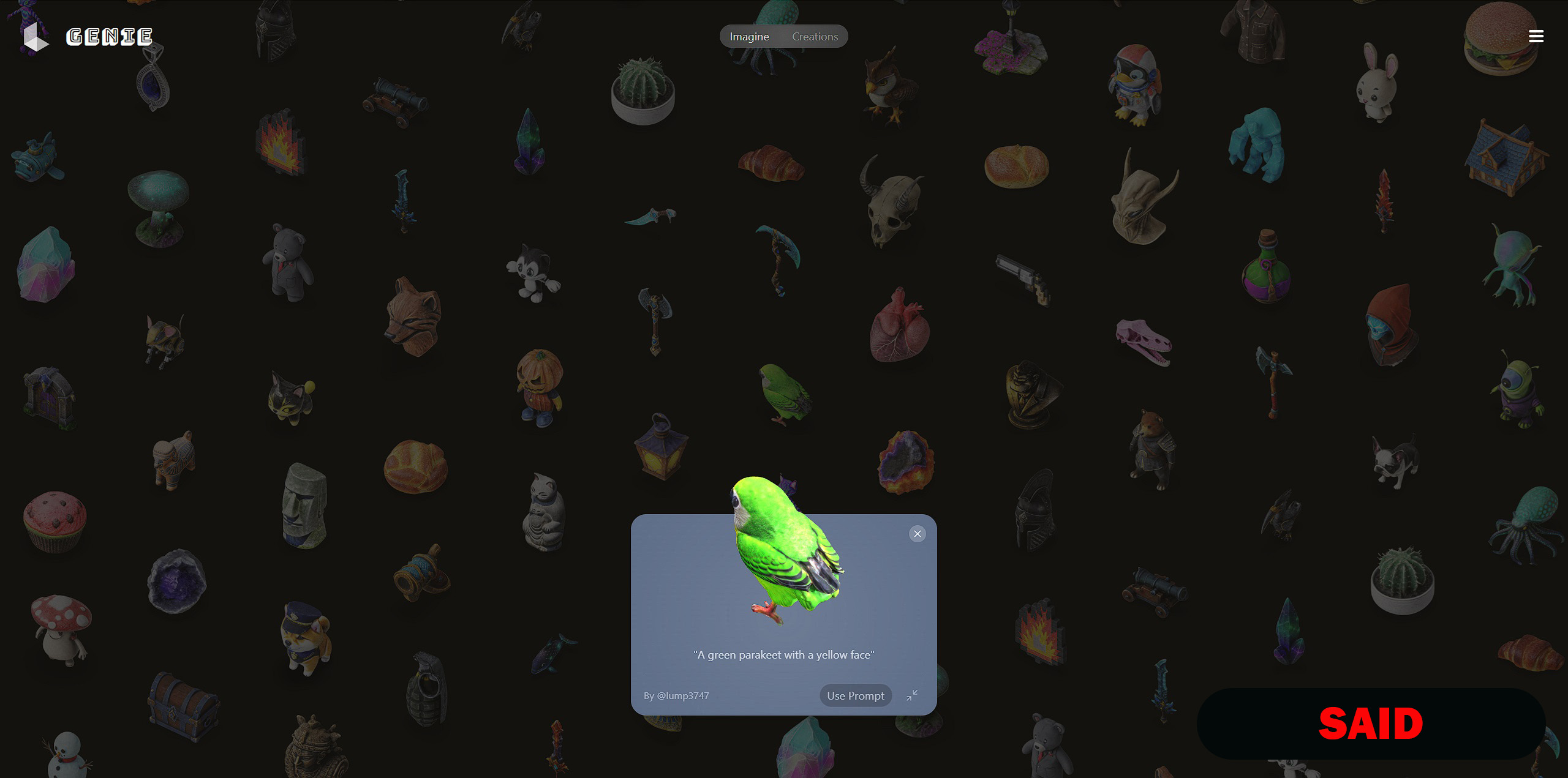

5. GENIE

An interesting text-to-3D generator from Luma for simple objects, currently free. It can also generate from ready-made shape templates shown across the website. Models come with blurry textures and slightly broken topology, but they are usable for background elements and scene filling. If needed, the topology can be fixed quickly. It generates 4 models at once, or 8 with a second click.

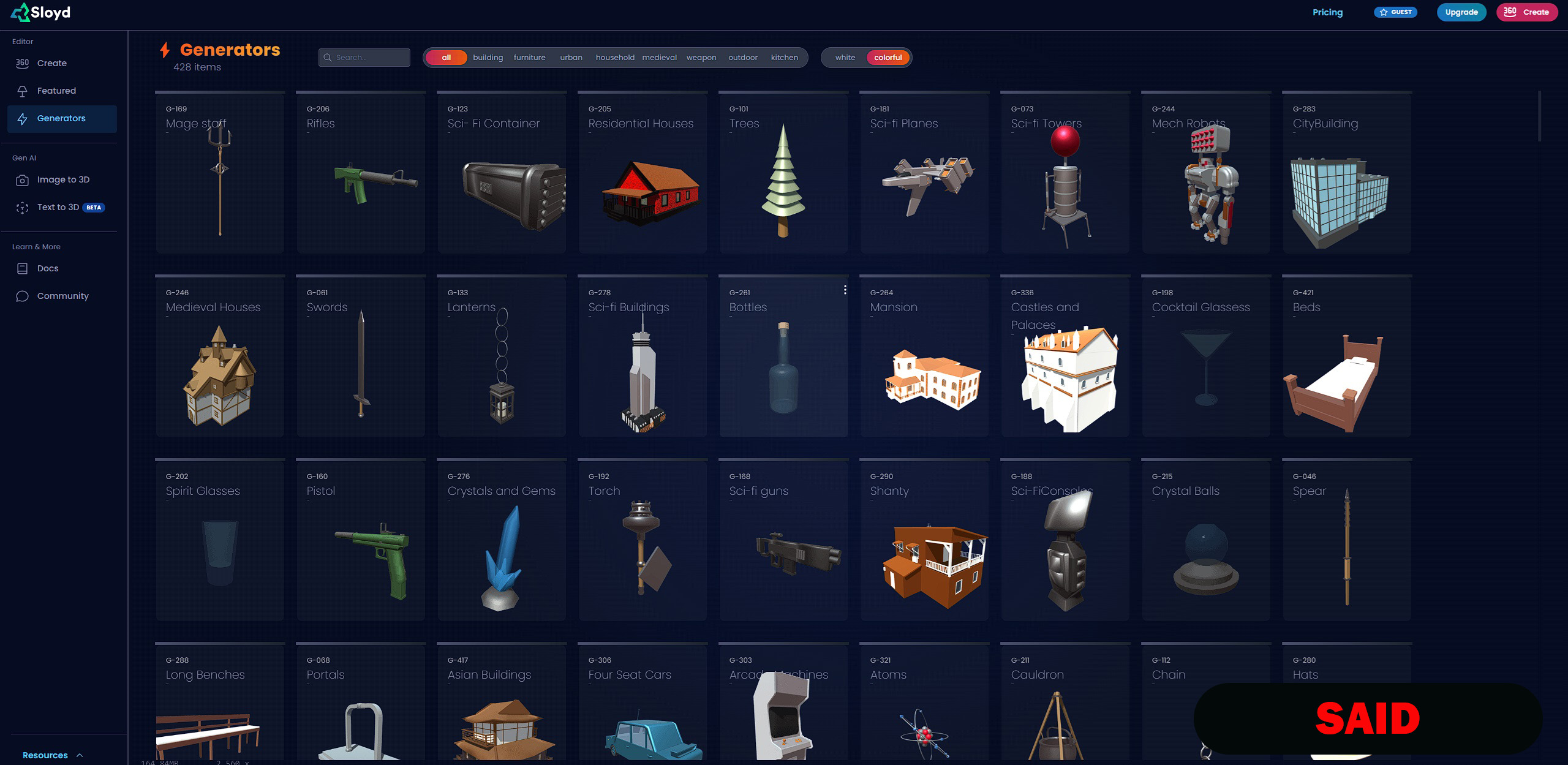

4. SLOYD

A fast generator that creates 3D models from text, images, and various templates. The website offers over 400 model templates divided into categories (buildings, streetlights, cars, etc.). You can modify them parametrically in the editor with AI and download them for personal use on the free plan. The models are simple but have clean topology. You can create models in three modes: high quality, low poly, and 3D printing. It has good texturing tools and a wide variety of materials. Export options include GLB, STL, OBJ, and JSON. A decent mix of ready-made models and custom configuration.

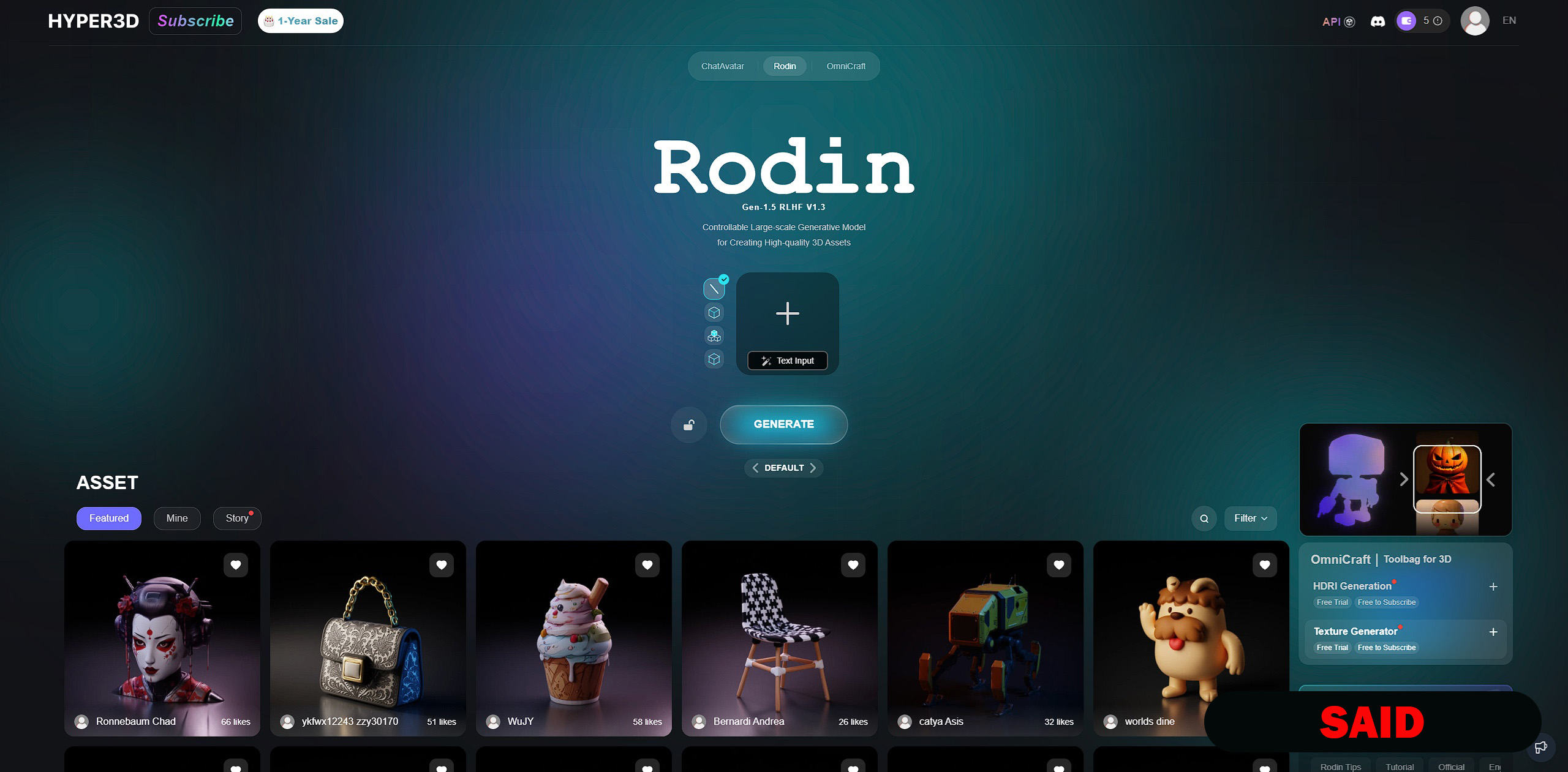

3. RODIN

A 3D generator by Hyper3D that can produce multiple variations of a 3D model. The one-time free plan provides up to ten 3D models, supports generation from text prompts, and offers export in several popular formats. The paid version adds many features, such as vector-to-3D conversion, HDRI map generation from images, a texture generator, a geometry editor, a format converter, and more. In June 2025, improvements were planned for quad-based topology generation, along with other enhancements.

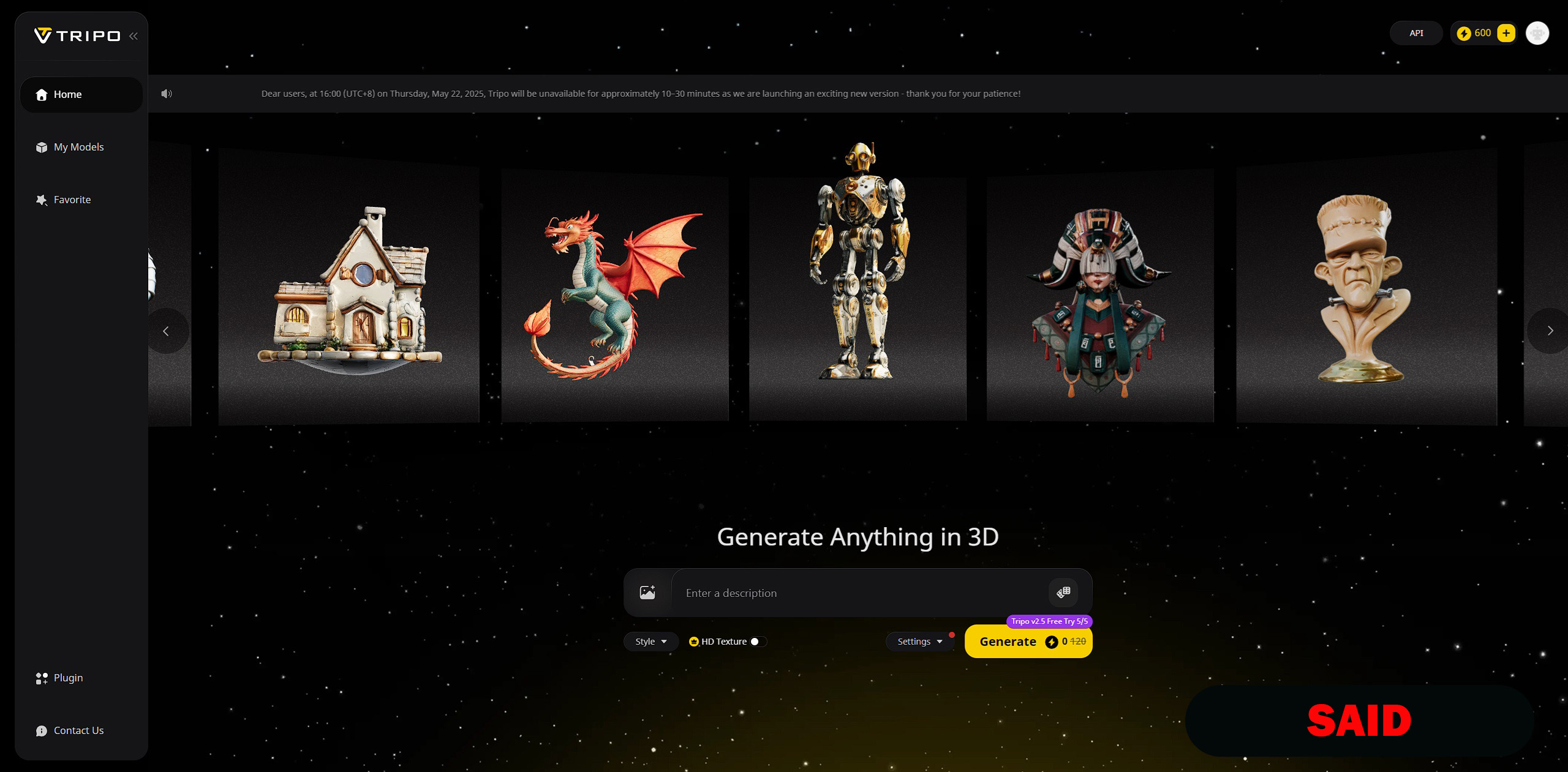

2. TRIPO SG

A high-quality 3D generator available both on Hugging Face and on its own platform. Users receive 600 credits per month after signing in. Up to 15,000 more can be earned by inviting friends. With the right approach, you can generate up to 30 models a day for free. It handles even moderately complex models well, and the results are suitable for retopology. The meshes have some issues and the textures are slightly blurry, but both can be easily fixed in Blender.

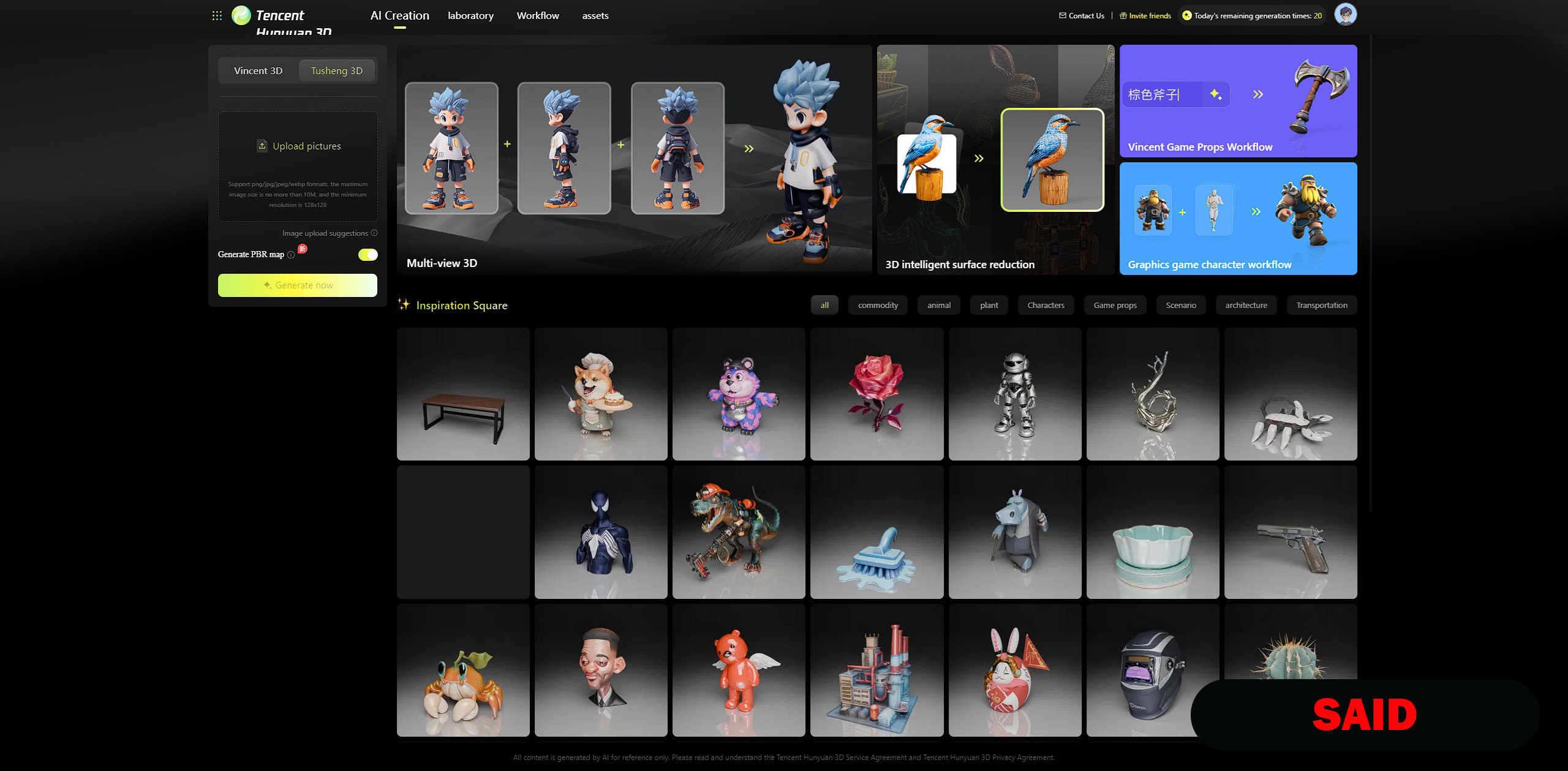

1. HUNYUAN

An excellent Chinese 3D model generator that supports text-to-3D and multi-image-to-3D generation. The free plan provides 20 credits per day, which can be used to create 20 3D models. The results are of high quality, partly because you can generate a model from front, side, and back view images. A similar feature is also implemented in the SCENARIO service. You can explore its capabilities further on the extended generation service. The Hunyuan3D model is available on Hugging Face, as is the Hunyuan3D-Omni model. The service's owner, TENCENT, has released its own open-source model Hunyuan-GameCraft for interactive creation of 3D game environments. You can also try Hunyuan-Game.

Tencent's HunyuanWorld-Mirror is a general feed-forward model for comprehensive 3D geometric prediction. It integrates various geometric priors (camera poses, calibrated camera intrinsics, depth maps). In a single forward pass, it generates multiple 3D representations at once: point clouds, multi-view depths, camera parameters, surface normals, and 3D Gaussian splats.

As a bonus, you can check out MESHCAPADE – a tool for creating 3D avatars (humans) with animation from templates, scans, photos, and videos, including body and facial motion capture. Registration gives 4,500 credits, enough to create dozens of avatars. The free plan limits video duration to 10 seconds.

Another noteworthy multimodal content generator is CGDREAM, developed by the large 3D stock marketplace CGTrader. It generates 3D models from images. You get 100 free credits daily, enough to generate either one 3D model from an image or five images.

The service HITEM3D provides 100 free credits for image-to-mesh generation, and each model costs 10 credits. It works fairly quickly and exports OBJ, STL, FBX, and GLB, but without texture mapping. A decent generator.

On the platform YVO3D, you get 150 free credits per month, with a cost of 30 credits per generation from a text description or image. It generates models with textures right away.

The online generator LEXA3D creates images from text, as well as 3D models from images or text prompts, and it also generates textures. On the free plan, you get the standard generator and low-resolution textures, and free models are placed in a public library.

The SGS-1 model offers parametric geometry generation from an image or a 3D mesh. It can create CAD B-Rep components in STEP format.

Microsoft offers the Copilot 3D service, which generates 3D models from images. It works well with simple standalone images. You can also generate images in the same Copilot service. The company also released the neural network TRELLIS, which generates textured 3D models from images using voxel generation technology.

The Trellis model is also used by the TRELLIS 3D service alongside the Hunyuan models.

The ActionMesh model by Meta creates animated 3D models from an image or video.

Texturing.XYZ, the company behind the realistic skin texturing studio SYMBIOTE, has created SKAP. It is a texturing neural network similar to the one from YVO3D.

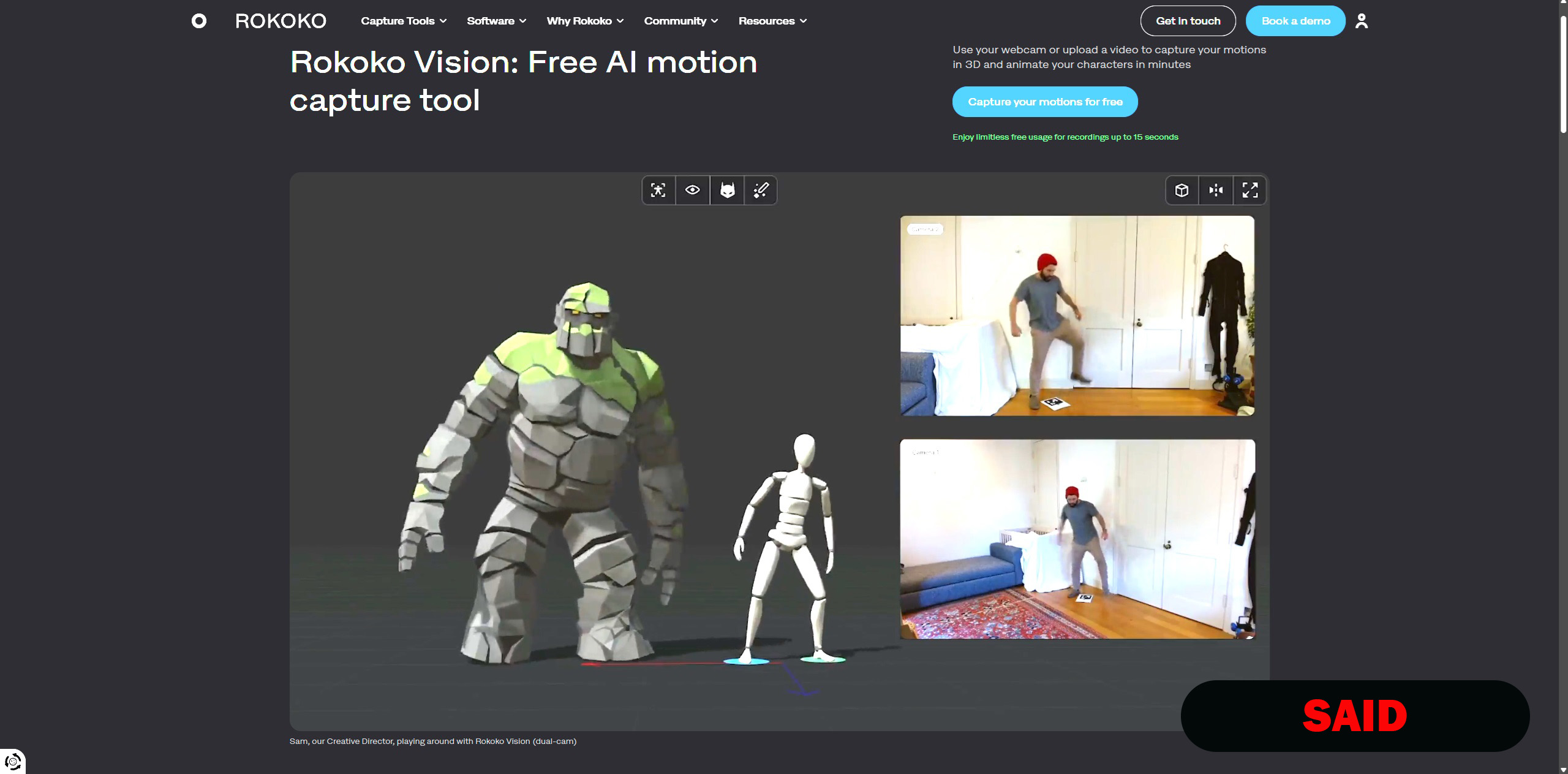

For professionals, consider the ROCOCO service, which includes a browser-based video motion capture application.

The online resource offers a 7-day trial. During the trial, you can capture motion with your phone or laptop camera and transfer it to rig bones. In the free trial, you can process an unlimited number of videos up to 15 seconds long. The site has a decent video tutorial and a simple, detailed instruction guide. Registration is required, and the seven-day trial begins immediately after you register. You can also download 263 free human mocap files made with this service, along with many other interesting free resources. The captured motion can also be transferred to ACCURIG.

In the same field, but working a bit differently, is DEEPMOTION. It generates 3D animation of characters (people) from text and from video capture. The free plan allows you to create and export one character per month with two animations of up to 10 seconds each. The free plan also supports foot locking (to prevent sliding while walking), motion smoothing, and physically correct joint filtering.

The service VISOID helps properly render 3D models and design scenes using neural network control over rendering. You can add images to the scenes, which will be visualized together with the 3D objects.

You can also visualize interiors and exteriors on the ReRenderAI platform. Its convenient editor lets you adjust room characteristics, furniture arrangement, and materials. You get 3 free credits daily, enough for 3 watermarked renders.

The ZOHRAIZ-AI studio accepts orders for creating 3D models and completes them free of charge within 24 hours.

More motion capture services:

PLASK offers a 15-second daily limit, automatic camera and lighting, 1 GB of storage, video rendering, and export to FBX, GLB, BVH.

MOVE – a one-time 30 free seconds of animation;

RADICAL – 1 hour of animation and one FBX export for free;

REMOCAPP – 30 seconds per month free and 2 cameras;

QUICKMAGIC – with face and finger capture, 50 free credits per month.

REPLIKA is a 3D AI companion designed to help with stress and loneliness. You create a 3D character upon registration and can control it in a 3D environment (a pink room with a window and soothing music), clicking on objects your character can interact with, like playing a guitar. You get 15 gems and 400 coins for free, which you can use to customize the room and character. In the paid version, you can also talk to the AI by voice and fine-tune it to match your interests and preferences. You can discuss books, favorite movies, news, and other topics.

Another decent generative add-on for Blender is VIDEO-DEPTH-AI, which generates objects from images by creating a depth map.

You can also work with Gaussian splats in Blender. Watch this video on YouTube, or other videos by the same creator, to learn how to install and use the necessary tools.

Edit-by-Track is a technology for editing object and camera motion in videos based on point tracks. The model takes all track correspondences (visible or hidden) into account, so it can handle visibility automatically when editing 3D motion.

For the 3D editor RHINO, there is a plugin called RHINOAIMCP. It connects the editor to a neural network that can generate models directly within the editor. It runs a local MCP (Model Context Protocol) server with two main tools:
- "get_scene" returns the geometric attributes of the current Rhino scene.
- "rhinoceros_operator" performs tasks by requesting and executing C# scripts inside the editor.
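To make the MCP idea more concrete, here is a minimal, stdlib-only sketch of a server that routes JSON-RPC 2.0 `tools/list` and `tools/call` requests to two tools named like the plugin's. This is not RHINOAIMCP's code. The scene data, `handle_request`, and the stub that only "receives" a C# script are hypothetical stand-ins. The message shapes are a simplified take on MCP's JSON-RPC messages, with no initialization handshake and no transport layer.

```python
import json

# Hypothetical stand-in for the current Rhino scene; a real plugin would query Rhino.
SCENE = [
    {"id": "obj-1", "type": "Box", "bbox": [[0, 0, 0], [2, 1, 1]]},
    {"id": "obj-2", "type": "Sphere", "center": [5, 0, 0], "radius": 1.5},
]


def get_scene(arguments):
    """Return the geometric attributes of every object in the (fake) scene."""
    return {"objects": SCENE}


def rhinoceros_operator(arguments):
    """Stub: a real tool would run the C# script inside Rhino; this one only reports it."""
    script = arguments.get("script", "")
    return {"status": "received", "script_length": len(script)}


# Tool registry: name -> (handler, description). Names mirror the plugin's two tools.
TOOLS = {
    "get_scene": (get_scene, "Return geometry of the current scene"),
    "rhinoceros_operator": (rhinoceros_operator, "Execute a C# script in the editor"),
}


def error_response(req_id, code, message):
    """Build a JSON-RPC 2.0 error response string."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw):
    """Route one JSON-RPC 2.0 request string and return the JSON response string."""
    req = json.loads(raw)
    req_id = req.get("id")
    method = req.get("method")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": desc, "inputSchema": {"type": "object"}}
            for name, (_, desc) in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = req.get("params", {})
        name = params.get("name")
        if name not in TOOLS:
            return error_response(req_id, -32602, f"Unknown tool: {name}")
        handler = TOOLS[name][0]
        payload = handler(params.get("arguments", {}))
        # Tool output goes back as a list of content items; here a single JSON text item.
        result = {"content": [{"type": "text", "text": json.dumps(payload)}]}
    else:
        return error_response(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})
```

The neural network sees only the tool names and descriptions from `tools/list`. It then decides which tool to call and with which arguments. That is why a plugin like this can stay small.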

You can also generate a depth map for Blender from an image using the neural network Distill-Any-Depth, and process it using the method shown in this video.
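Turning a depth map into geometry can be sketched in a few lines:
- treat each pixel as a grid vertex and push it along Z by its depth value;
- join neighbouring pixels into quads;
- write the result as OBJ text that Blender can import.

This is a simplified displacement approach. It is not the method from the video or the internals of Distill-Any-Depth. The function name, the tiny depth array, and `depth_scale` are illustrative assumptions.

```python
def depth_to_obj(depth, depth_scale=1.0):
    """Turn a 2D depth map (list of rows) into OBJ text: one vertex per pixel, one quad per cell."""
    rows, cols = len(depth), len(depth[0])
    lines = []
    # Vertex (r, c): x = column, y = -row (keeps the image upright), z = scaled depth.
    # Use a negative depth_scale if larger values in your map mean "farther away".
    for r in range(rows):
        for c in range(cols):
            lines.append(f"v {c} {-r} {depth[r][c] * depth_scale}")
    # OBJ indices are 1-based: vertex (r, c) has index r * cols + c + 1.
    for r in range(rows - 1):
        for c in range(cols - 1):
            top_left = r * cols + c + 1
            top_right = top_left + 1
            bottom_left = top_left + cols
            bottom_right = bottom_left + 1
            # Counter-clockwise order seen from +Z, so face normals point toward the viewer.
            lines.append(f"f {top_left} {bottom_left} {bottom_right} {top_right}")
    return "\n".join(lines) + "\n"
```

Saving the returned string to a `.obj` file gives a mesh that Blender's OBJ importer can open. A real depth map would first be read from the image file. That step is left out to keep the sketch dependency-free.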

For developers, the author of a popular React library has created a wrapper called PLAYCANVAS REACT, built on top of the PLAYCANVAS engine. This library automatically creates and manages a scene with built-in physics, without the need to manually set up a canvas, as is typically required in Three.js or low-level graphics tools like the WebGL API.

The Skinned Multi-Person Linear Model (SMPL) and its newer version SMPL-X are parametric 3D human models. They can pose the body and, in SMPL-X, facial expressions as well, and they are trained on 3D scans, images, and motion capture data. Using this technology, researchers developed a system for reconstructing fetal shape and pose in prenatal diagnostics.
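SMPL poses its mesh with linear blend skinning (LBS), the same idea as the skinning step in the pipeline at the top of this article. Each vertex is moved by a weighted mix of its bones' transforms: v' = Σ_k w_k (R_k v + t_k).

The sketch below shows only that step, for two bones rotating around the Z axis. Bone transforms are assumed to be already expressed relative to the rest pose. SMPL's learned shape and pose blend shapes, its joint regressor, and its real skinning weights are left out. The bone values are made up for illustration.

```python
import math


def rot_z(angle):
    """3x3 rotation matrix about the Z axis (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def transform_point(transform, v):
    """Apply a bone transform (R, t) to point v: R @ v + t."""
    R, t = transform
    return [sum(R[i][j] * v[j] for j in range(3)) + t[i] for i in range(3)]


def rotation_about_pivot(angle, pivot):
    """Bone transform rotating around the Z axis through `pivot` (e.g. an elbow joint)."""
    R = rot_z(angle)
    Rp = transform_point((R, [0.0, 0.0, 0.0]), pivot)
    # R(v - p) + p = R v + (p - R p)
    return R, [pivot[i] - Rp[i] for i in range(3)]


def linear_blend_skinning(vertices, weights, bone_transforms):
    """Deform each rest-pose vertex by the weighted sum of its bones' transforms.

    weights: one list per vertex with a weight per bone (each row should sum to 1).
    """
    posed = []
    for v, w in zip(vertices, weights):
        out = [0.0, 0.0, 0.0]
        for w_k, transform in zip(w, bone_transforms):
            if w_k == 0.0:
                continue  # this bone does not influence the vertex
            moved = transform_point(transform, v)
            for i in range(3):
                out[i] += w_k * moved[i]
        posed.append(out)
    return posed
```

For example, take a second bone bent 90 degrees around a joint at (1, 0, 0). A vertex at (2, 0, 0) that is fully bound to it swings to roughly (1, 1, 0). A vertex sitting on the joint and split 50/50 between the bones stays at (1, 0, 0).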

A major video platform, MEDALTV, has created a lab called General Intuition, where it trains models for environments that require deep spatial and temporal reasoning.

Researchers from Seoul National University (South Korea) have developed the EgoX system, which generates first-person videos from third-person videos.

Scientists from the University of Massachusetts (USA) have developed a robot that assembles structures from a text prompt. The system combines a neural network that designs the assembly with a neural network that controls the robot. The process resembles 3D printing but is faster.

Researchers at Google DeepMind have developed a 3D space generator called GENIE3, capable of creating interactive worlds and modifying them on demand.

Google DeepMind also introduced the spatial reasoning agent SIMA, which navigates spatial environments and can learn to play 3D games.

A related spatial-perception technology is ByteDance's Depth-Anything model. It is trained using a "teacher-student" method and estimates a depth map from a single image, recovering the geometry of the space shown in it.

Meta has developed WORLDGEN, a comprehensive system for creating interactive, navigable 3D worlds from a single text prompt. The system combines procedural analysis, diffusion-based 3D generation, and object-oriented scene decomposition. The result is geometrically consistent, visually rich 3D worlds that are efficient to render, suitable for games, simulations, and immersive social environments. Meta's SAM 3D models make it possible to reconstruct objects and people in 3D from a single image.

The company WORLDLABSAI has introduced MARBLE, its model for creating 3D environments. The technology is based on rendering .spz files with the SPARK library, which works with WebGL. A 3D scene can be exported as Gaussian splats.

Developers from Skywork have presented MATRIX-GAME, an open diffusion model of a 3D world. According to the developers, Matrix-Game is an interactive world model that generates long videos "on the fly" using autoregressive diffusion. It consists of three key components:
(1) a scalable data processing pipeline for Unreal Engine and GTA5 environments that efficiently produces large volumes (~1,200 hours) of video data with diverse interaction annotations;
(2) an action injection module that uses frame-level mouse and keyboard input as interactive conditions;
(3) multi-step distillation based on a causal architecture for real-time video generation and streaming.

The real-time streaming video generation system MotionStream is being developed by Adobe Research and Carnegie Mellon University (USA). It is a “teacher–student” system:
- It extracts 2D motion trajectories of objects and the camera from the input video and encodes them as embeddings.
- The embeddings are combined with scene images and text descriptions and fed into a bidirectional diffusion transformer. At this stage, the “teacher” network is trained to reconstruct motion accurately.
- The “student” network then learns from the teacher's behavior and reproduces the process faster.
- To generate long videos at a consistent speed and with low latency, the student simulates temporal extrapolation. It uses an attention sink (an attention stabilizer) and a KV cache (keys and values previously computed by the “student” network).
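The attention-sink idea can be illustrated with a toy cache policy:
- keep the first few entries (the "sink") permanently;
- keep a sliding window of the most recent entries;
- drop everything in between.

Memory and per-frame cost then stay constant however long the video runs. This is a generic sketch of the technique, not MotionStream's implementation. The class name, the sizes, and the use of string labels instead of real key/value tensors are assumptions.

```python
from collections import deque


class SinkKVCache:
    """Toy KV cache: a fixed attention-sink prefix plus a rolling window of recent entries."""

    def __init__(self, num_sink, window):
        self.num_sink = num_sink
        self.sink = []                      # first entries, never evicted
        self.recent = deque(maxlen=window)  # newest entries; the oldest falls out automatically

    def append(self, key, value):
        """Store the key/value pair for a new frame (or token)."""
        if len(self.sink) < self.num_sink:
            self.sink.append((key, value))
        else:
            self.recent.append((key, value))

    def entries(self):
        """Keys/values the next step would attend to: sink first, then the recent window."""
        return self.sink + list(self.recent)
```

For example, with `num_sink=2` and `window=3`, after ten frames have been appended the cache holds frames 0, 1, 7, 8, and 9. The attention cost per step therefore no longer grows with video length.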

The company Odyssey has introduced an eponymous neural network capable of predicting future frames and generating interactive streaming video.

Runway, the popular generative video model developer, is training General World Models (GWM) designed to interact with the world. These are autoregressive models based on its powerful GEN video generation model. The GWM models come in three variants, which are planned to be merged into a single world model.

NVIDIA introduced GEN3C, a concept for real-time 3D video creation implemented through a spatio-temporal 3D cache. According to the developers, the neural network takes images or a video plus the user's requested camera movement. It then uses diffusion to generate the scene from new viewpoints, producing a 3D video. The project website has an interactive demo of these generations.

NVIDIA is also developing the ecosystem around Universal Scene Description (OpenUSD), an open framework for describing 3D scenes and environments. The chipmaker owns COSMOS, a solid 3D training environment for robot learning, and has made major progress in online 3D world generation. In addition, NVIDIA has launched the Audio2Face service, which animates 3D avatars' faces in real time from audio. Meanwhile, its NITROGEN gameplay model keeps being improved. It is trained on 40,000 hours of gameplay video from more than 1,000 games.

OASIS, a diffusion-transformer-based video world generator from the Israeli startup DECARTAI, can create interactive video and modify a video stream in real time. The application is installed on a PC and is mainly used with the popular game Minecraft to alter the game world. An online demo version is available on the website.

CAP4D, a method for creating animated 4D avatars with morphable multi-view diffusion models, was developed by scientists from a Toronto institute together with colleagues. The model takes one or several images as input. In real time, it computes conditioning signals from a 3DMM (3D Morphable Model), such as head pose, facial expression, and camera direction. The avatar itself is built with Gaussian Splatting (Gaussian Avatars), using deformable Gaussian primitives placed in 3D space.
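In its classic form, a 3DMM is a linear model. A face mesh is the average face plus weighted identity (shape) offsets plus weighted expression offsets: S = S_mean + Σ α_i B_i + Σ β_j E_j. The toy version below uses tiny made-up bases with two vertices. Real 3DMMs have thousands of vertices and bases learned from scans. This sketches the general formulation only, not necessarily the exact model CAP4D uses.

```python
def morphable_model(mean_shape, shape_basis, shape_coeffs, expr_basis, expr_coeffs):
    """Linear 3D morphable model: mean face + weighted identity and expression offsets.

    mean_shape: list of [x, y, z] vertices
    shape_basis / expr_basis: lists of components, each a per-vertex list of [dx, dy, dz]
    """
    result = [list(v) for v in mean_shape]  # copy so the mean face is not modified
    for basis, coeffs in ((shape_basis, shape_coeffs), (expr_basis, expr_coeffs)):
        for component, weight in zip(basis, coeffs):
            for v_idx, offset in enumerate(component):
                for i in range(3):
                    result[v_idx][i] += weight * offset[i]
    return result
```

Coefficients like these (shape α, expression β), together with head pose and camera, are the kind of compact signal a 3DMM-conditioned generator can be driven by. Changing β animates the expression while α keeps the identity fixed.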

Tencent has developed YAN, a framework for real-time video generation. It has three main modules: high-precision simulation, multimodal generation, and multi-granularity editing. With them, YAN implements the first full pipeline for interactive video: “input command → real-time generation → dynamic editing”. Unlike HUNYUAN-GAMECRAFT mentioned above, this model is intended for higher-quality rendering of space with strong interactivity. The company's video diffusion framework VOYAGER is likely part of the same general effort to generate 3D spaces on the fly.

KALEIDO is a spatial modeling neural network from the Chinese brand Shikun. It uses a sequence-to-sequence architecture to build a comprehensive understanding of three-dimensional space from a variety of video and 3D data. Traditional methods require optimization for each scene or are limited to specific object categories. Kaleido, by contrast, can visualize new objects from scratch. It generates realistic visualizations of both individual objects and complex environments without any per-scene fine-tuning.

The PAN project uses a neural network to create an interactive simulation of the world. The system recreates videos of the critical moments of accidents and other dangerous events. Neural networks trained on such videos can learn to anticipate dangerous situations and either prevent them or minimize the consequences.

The open-source world simulator LingBot-World is offered by Robbyant, a company that develops neural networks for robotics.

The GAMEBOAI service offers the creation of 2D games by a neural network based on a text prompt. After registration, the user receives a generated project package with the code for deploying the game. The game can be immediately published and played. Typically, it is a standard platformer. You can generate your own game from a prompt or edit an existing one.

It is also worth paying attention to Gaussian Splatting, a technology for 3D scanning and spatial image generation. It reconstructs a scene from many photos or video frames taken from different viewpoints, and some apps also use LiDAR data. Instead of polygonal meshes, it uses millions of semi-transparent colored points (splats), which allows photorealistic scene rendering. The technology is implemented in services such as Polycam and LumaAI. The DOGRECON project, currently in development, creates animated dogs from a single image. Services that support geometry-based (mesh) 3D scanning include 3DF Zephyr, Scaniverse, Kiri Engine, and RealityScan.
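Semi-transparent splats become a pixel through front-to-back alpha compositing:
- The splats covering the pixel are sorted by depth.
- Each one contributes its colour, weighted by its opacity and by how much light still passes through the splats in front of it: C = Σ c_i α_i Π_{j<i} (1 - α_j).

The sketch assumes isotropic 2D Gaussians already projected to screen space and uses made-up splat values. Real renderers project anisotropic 3D Gaussians and rasterise them in tiles on the GPU.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    x: float        # screen-space centre (pixels)
    y: float
    depth: float    # distance from the camera
    sigma: float    # isotropic spread in pixels (real splats are anisotropic)
    opacity: float  # peak opacity in [0, 1]
    color: tuple    # (r, g, b) in [0, 1]


def shade_pixel(px, py, splats, background=(0.0, 0.0, 0.0), min_transmittance=1e-4):
    """Front-to-back alpha compositing of Gaussian splats at pixel (px, py)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer splats
    for sp in sorted(splats, key=lambda s: s.depth):
        d2 = (px - sp.x) ** 2 + (py - sp.y) ** 2
        alpha = sp.opacity * math.exp(-0.5 * d2 / sp.sigma ** 2)  # Gaussian falloff
        for i in range(3):
            color[i] += sp.color[i] * alpha * transmittance
        transmittance *= 1.0 - alpha
        if transmittance < min_transmittance:  # pixel is effectively opaque
            break
    # Whatever light is left lets the background show through.
    return tuple(color[i] + background[i] * transmittance for i in range(3))
```

For example, a half-transparent red splat in front of an opaque blue one, both centred on the pixel, gives an even red-blue mix (0.5, 0.0, 0.5), whatever order the splats are passed in.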

You can build 3D scenes from image-based point clouds in the Postshot editor and then process them in the 3D engine of TouchDesigner.

I don’t know exactly how popular 3D generators are nowadays, since the queues for video generation are tens of times longer, but they still help me quite often in my work. Creating a character (a little dragon, a smurf, or a monster) used to take two or three days. Now it takes just one hour: base mesh with forms – retopo – textures – rig – skinning – done.

But as far as I know, many people still prefer to work by hand. Several generators that seemed promising last year are no longer available online. If some links don’t work, it means the teams decided to move their resources to the more popular field of video generation.

And we continue working in 3D, to keep delivering beauty made by hand, but now with the help of AI.

Take the SAID test to once again make sure that AI is not capable of deceiving us.

said-correspondent🌐

Discussion in the topic with the same name in the community.